Image captioning for the blind

Participated in the Image Captioning for the Blind challenge by VizWiz and achieved an overall average BLEU score of 0.2.

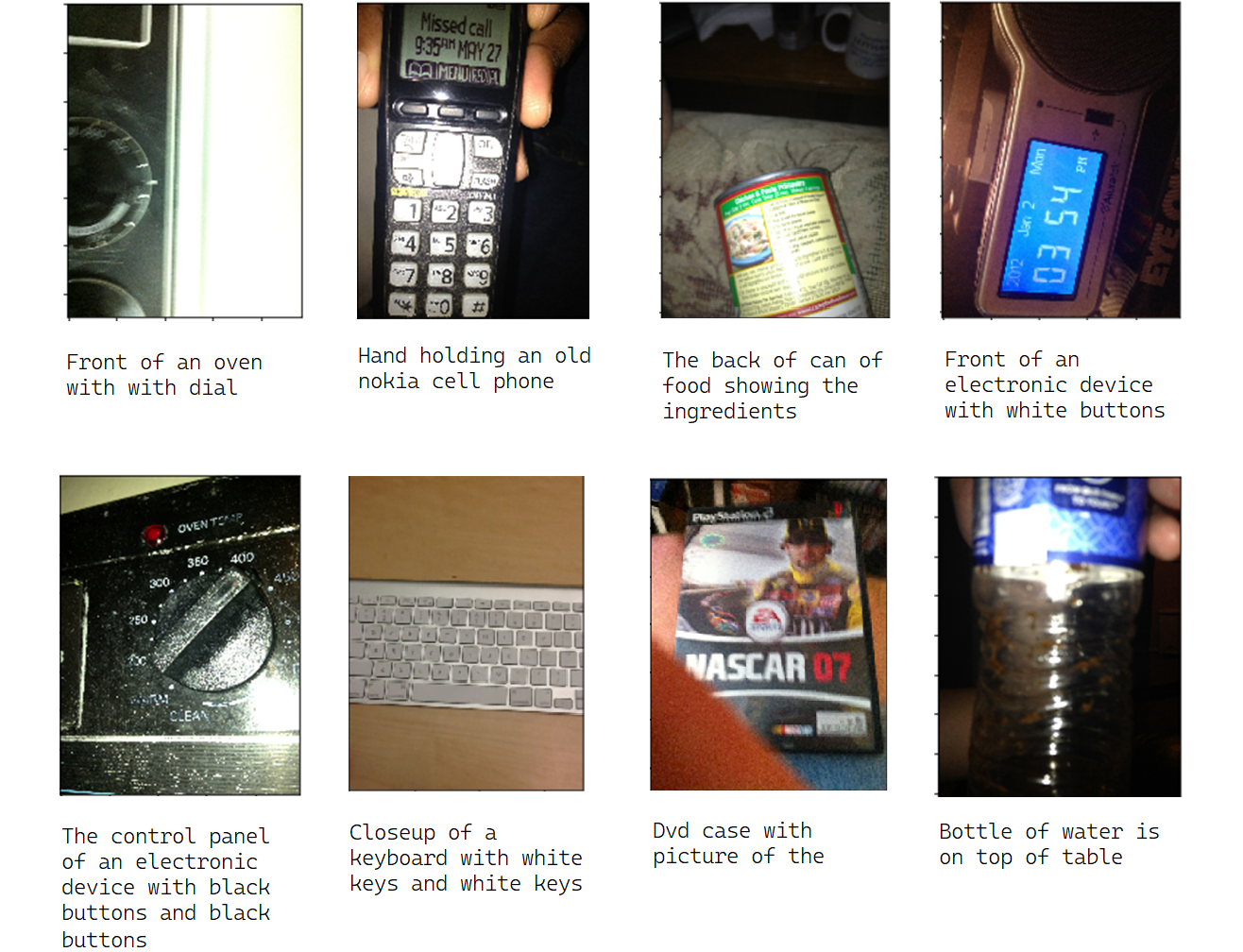

Given an image, the task was to predict an accurate caption. The VizWiz-Captions dataset consists of 39,181 images taken by people who are blind, each paired with 5 captions. Many of the pictures have clarity issues, which makes captioning them accurately harder.
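Because every image comes with several reference captions, a predicted caption can be scored against all of them at once. The snippet below is a minimal, unsmoothed sentence-level BLEU sketch in plain Python. It is only an illustration of the metric, not the challenge's official scoring code, and the function names `ngrams` and `sentence_bleu` are my own.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, references, max_n=4):
    """Unsmoothed BLEU for one tokenised caption against several references."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        # Clip each n-gram count by the largest count seen in any single reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty uses the reference length closest to the candidate length.
    closest = min((abs(len(r) - len(candidate)), len(r)) for r in references)[1]
    bp = 1.0 if len(candidate) > closest else math.exp(1 - closest / len(candidate))
    return bp * math.exp(sum(log_precisions) / max_n)
```

Calling `sentence_bleu(predicted_tokens, five_reference_token_lists)` for each image and averaging the results gives one corpus-level number. Short or noisy captions often score 0 without smoothing, so a real evaluation would normally use an established implementation.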

To convert every image into a fixed-size vector that can be fed to the neural network, I used transfer learning with the InceptionV3 model (a convolutional neural network).
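A rough sketch of this feature-extraction step with Keras is shown here. It assumes TensorFlow is installed and that images have already been resized to 299x299 RGB. The helper names `build_encoder` and `encode_images` are hypothetical, not taken from the project code.

```python
import numpy as np
from tensorflow.keras.applications.inception_v3 import InceptionV3, preprocess_input


def build_encoder(weights="imagenet"):
    # include_top=False drops the classification head; pooling="avg" keeps the
    # global-average-pooled activations, i.e. one 2048-d vector per image.
    return InceptionV3(weights=weights, include_top=False, pooling="avg")


def encode_images(encoder, images):
    """images: array of shape (n, 299, 299, 3) with pixel values in [0, 255]."""
    batch = preprocess_input(np.array(images, dtype="float32"))  # scales to [-1, 1]
    return encoder.predict(batch, verbose=0)
```

The vectors are usually computed once for the whole dataset and cached to disk, so the CNN does not have to run again during caption training.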

The model is pretrained on the ImageNet dataset. Its last softmax layer (which performs the classification) is removed to extract the bottleneck features, which are then used to train a model with an LSTM layer that processes the sequence input (partial captions).
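One common way to wire this up is a "merge" architecture: the image vector and the LSTM encoding of the partial caption are combined, and the network predicts the next word. The sketch below assumes that layout. The layer sizes, the `add` merge, and the name `build_caption_model` are illustrative assumptions and not necessarily the configuration used for the submission.

```python
from tensorflow.keras import Model, layers


def build_caption_model(vocab_size, max_len, feature_dim=2048, units=256):
    # Image branch: project the bottleneck features down to `units` dimensions.
    image_in = layers.Input(shape=(feature_dim,))
    image_vec = layers.Dense(units, activation="relu")(image_in)

    # Text branch: embed the partial caption (0 = padding) and run it through an LSTM.
    caption_in = layers.Input(shape=(max_len,), dtype="int32")
    embedded = layers.Embedding(vocab_size, units, mask_zero=True)(caption_in)
    caption_vec = layers.LSTM(units)(embedded)

    # Merge both branches and predict a distribution over the next word.
    merged = layers.add([image_vec, caption_vec])
    hidden = layers.Dense(units, activation="relu")(merged)
    next_word = layers.Dense(vocab_size, activation="softmax")(hidden)

    model = Model(inputs=[image_in, caption_in], outputs=next_word)
    model.compile(loss="sparse_categorical_crossentropy", optimizer="adam")
    return model
```

At training time each caption is split into (image features, first k words) → word k+1 pairs. At inference time the caption is generated one word at a time by feeding the prediction back in as part of the partial caption.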

References:

- A Neural Image Caption Generator

- Role of Recurrent Neural Networks (RNNs) in an Image Caption Generator

Github Link

Some Results