Image captioning for the blind

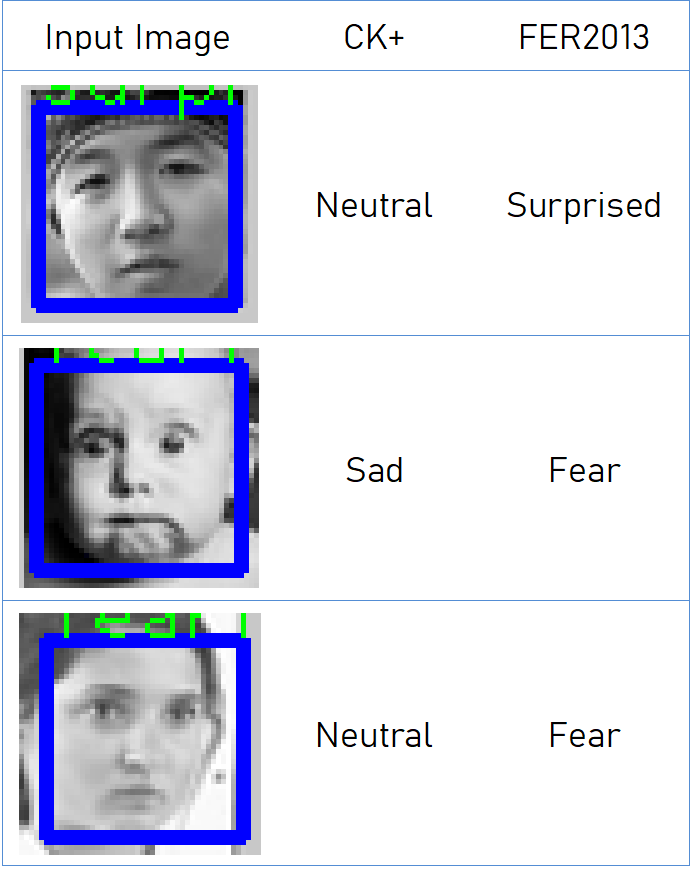

Tested a novel facial expression recognition method, as presented by IEEE, on facial images with unconstrained poses and illumination from the FERC 2013 Dataset. An expectation– maximization algorithm is developed to reliably estimate the emotion labels.

To address the recognition of multi-modal ‘wild’ expressions, a new Deep Locality-Preserving Convolutional Neural Network (DLP-CNN) has been used to enhance the discriminative power of deep features by preserving the locality closeness while maximizing the inter-class scatter. A comparison has been made with the lab-constrained images from the CK+ Dataset.

Achieved a training accuracy of 66.3% on the FER-2013 Dataset and 72% on CK+ Dataset(unconstrained emotion data) after training for 20 epochs.

Observations:

- Tthe model trained on the FER13 dataset is more receptive to not explicitly vivid expressions. This is well explained by the fact that, CK+ itself, has posed emotions, which are comparatively vivid and unambiguous. However, FER13 being more diverse, the accuracy of this model on “wild” emotions is distinctly higher.

References:

Some Results